ChatGPT-4 Turbo could be practical, scalable, and cost-effective for implementing comprehensive postdeployment monitoring of some AI tools used by radiologists.

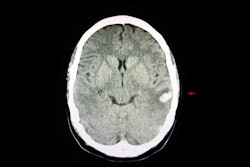

Researchers from Baylor College of Medicine and the Radiology Partners Research Institute tested an automated workflow using the large language model (LLM), drawing on data from 37 Radiology Partners practices across the U.S. to look for performance drift in Aidoc's deep-learning intracranial hemorrhage (ICH) detection system. The findings were published on August 11 in Academic Radiology.

"Traditional drift detection approaches, which rely on real-time feedback, are often impractical in healthcare settings due to delays in obtaining ground-truth data," explained corresponding author Mohammad Ghasemi-Rad, MD, and colleagues in the paper. "While recent studies emphasize the importance of regular monitoring and model updates, they offer limited practical guidance for implementation."

To address the gap, the team measured the data extraction performance of a HIPAA-compliant, Microsoft Azure-hosted version of ChatGPT-4 Turbo against a ground-truth dataset of 1,000 noncontrast head CT radiology reports that radiologists had labeled as positive or negative for ICH.
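The extraction step amounts to asking the model to classify each report. The sketch below is illustrative only: the prompt text, the one-word POSITIVE/NEGATIVE answer format, and the `complete` callable (a hypothetical stand-in for whatever client calls the Azure-hosted model) are assumptions, not details published in the study.

```python
from typing import Callable, Optional

# Hypothetical prompt: the article does not publish the study's actual prompt.
PROMPT_TEMPLATE = (
    "You are reviewing a noncontrast head CT radiology report.\n"
    "Does the report describe an intracranial hemorrhage (ICH)?\n"
    "Answer with exactly one word: POSITIVE or NEGATIVE.\n\n"
    "Report:\n{report}"
)


def label_report(report: str, complete: Callable[[str], str]) -> Optional[bool]:
    """Return True (ICH-positive), False (ICH-negative), or None if the reply is unusable.

    `complete` is a hypothetical stand-in for a chat-completion call that takes a
    prompt string and returns the model's text reply.
    """
    reply = complete(PROMPT_TEMPLATE.format(report=report)).strip().upper()
    if reply.startswith("POSITIVE"):
        return True
    if reply.startswith("NEGATIVE"):
        return False
    # Anything else is left unlabeled so it can be routed to human review.
    return None


# Example with a stub model that always answers "Negative".
print(label_report("No acute intracranial abnormality.", lambda prompt: "Negative"))  # prints False
```

Returning `None` for unparseable replies, rather than guessing a label, keeps malformed model output from silently entering the monitoring statistics.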

In an analysis of 332,809 head CT examinations performed between December 2023 and May 2024, of which Aidoc had flagged 13,569 as positive for ICH, the LLM correctly identified 120 cases as true positives and 79 as true negatives, the team reported. The authors noted that ChatGPT-4 Turbo demonstrated high diagnostic accuracy and a 60% concordance rate with radiologist reports.
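Because the LLM supplies a report-derived label for each AI-flagged exam, those labels can be aggregated over time to watch for drift. A minimal sketch, assuming a monthly window, a concordance metric defined as the share of AI-positive exams whose report is also ICH-positive, and a hypothetical baseline with a 5-percentage-point tolerance; none of these choices comes from the study, and the cases are made-up toy data.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class Case:
    month: str             # exam month, e.g. "2024-01"
    ai_positive: bool      # the ICH detection tool flagged the exam
    report_positive: bool  # LLM-extracted label from the radiologist's report


def monthly_concordance(cases: Iterable[Case]) -> Dict[str, float]:
    """Per month, the share of AI-flagged exams whose report is also ICH-positive."""
    flagged: Dict[str, int] = defaultdict(int)
    agreed: Dict[str, int] = defaultdict(int)
    for case in cases:
        if case.ai_positive:
            flagged[case.month] += 1
            if case.report_positive:
                agreed[case.month] += 1
    return {month: agreed[month] / flagged[month] for month in sorted(flagged)}


def drift_alerts(concordance: Dict[str, float], baseline: float,
                 tolerance: float = 0.05) -> List[str]:
    """Months whose concordance falls more than `tolerance` below `baseline`."""
    return [month for month, value in concordance.items()
            if value < baseline - tolerance]


# Toy cases for illustration only, not study data.
cases = [
    Case("2024-01", True, True), Case("2024-01", True, False),
    Case("2024-02", True, True), Case("2024-02", True, True),
    Case("2024-02", False, False),
]
rates = monthly_concordance(cases)
print(rates)                              # {'2024-01': 0.5, '2024-02': 1.0}
print(drift_alerts(rates, baseline=0.6))  # ['2024-01']
```

In practice the baseline would come from an initial validated period, and an alert would trigger human review rather than an automatic conclusion that the model has drifted.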

ChatGPT-4 Turbo yielded a positive predictive value of 1, a negative predictive value of 0.98, an overall accuracy of 0.995, and an area under the curve (AUC) of 0.99, according to the team.
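These figures follow from a standard 2x2 confusion matrix. The sketch assumes the 120 true positives and 79 true negatives come from a single validation sample, with zero false positives (implied by a PPV of 1) and the one false negative the authors describe; the article does not lay out the counts this way.

```python
def diagnostic_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Standard 2x2 confusion-matrix metrics."""
    return {
        "ppv": tp / (tp + fp),                        # positive predictive value
        "npv": tn / (tn + fn),                        # negative predictive value
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


# Assumed counts: 120 TP and 79 TN as reported, FP = 0 (implied by a PPV of 1)
# and FN = 1 (the single false negative the authors describe).
for name, value in diagnostic_metrics(tp=120, fp=0, tn=79, fn=1).items():
    print(f"{name}: {value:.4f}")
```

Under these assumptions the PPV (1.0) and accuracy (199/200 = 0.995) match the article, and NPV works out to 79/80 = 0.9875, which the article reports as 0.98. The sketch leaves out the AUC, which depends on how the study scored predictions.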

Among the discordant cases, 3.5% represented true ICH findings correctly identified by Aidoc but missed by radiologists, 0.5% were due to extraction errors by ChatGPT, and the remainder were Aidoc overcalls.

Only one false negative occurred, the study authors noted: a report describing an evolving right posterior mixed-density extra-axial fluid collection with a further decrease in the amount of increased density.

The study also found that the performance of Aidoc's ICH detection algorithm varied across CT scanners. In addition, Aidoc's false-positive classifications were influenced by scanner manufacturer, midline shift, mass effect, artifacts, and neurologic symptoms, Ghasemi-Rad and colleagues wrote.
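A first descriptive check for scanner-related variation is to stratify the AI tool's false positives by manufacturer. The article does not say how the authors modeled these factors; the sketch below is only a hypothetical tabulation with placeholder vendor names and made-up records, and unlike a multivariable analysis it does not adjust for midline shift, mass effect, artifacts, or symptoms.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def false_positive_share_by_scanner(
    records: Iterable[Tuple[str, bool, bool]],
) -> Dict[str, float]:
    """Per scanner manufacturer, the fraction of AI-positive exams without true ICH.

    Each record is (manufacturer, ai_positive, true_ich).
    """
    positives: Counter = Counter()
    false_positives: Counter = Counter()
    for manufacturer, ai_positive, true_ich in records:
        if ai_positive:
            positives[manufacturer] += 1
            if not true_ich:
                false_positives[manufacturer] += 1
    return {m: false_positives[m] / positives[m] for m in sorted(positives)}


# Made-up records with placeholder vendor names, not study data.
records = [
    ("Vendor A", True, True), ("Vendor A", True, False),
    ("Vendor B", True, False), ("Vendor B", False, False),
]
print(false_positive_share_by_scanner(records))  # {'Vendor A': 0.5, 'Vendor B': 1.0}
```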

The authors highlighted a 2020 survey by the American College of Radiology (ACR) in which 30% of members reported using AI in clinical practice and nearly 50% planned to adopt AI solutions within the next five years. Teleradiology services, which handle high case volumes during off-hours, rely heavily on noncontrast head CT scans, where AI-based ICH detection may be employed.

Furthermore, the paper suggested that the cost of the LLM monitor would be minimal compared with traditional manual review by radiologists or quality assurance staff.

"Despite the promise of AI, its performance is not static over time," the authors wrote. "This study underscores the importance of continuous performance monitoring for AI systems in clinical practice. Integration of LLMs offers a scalable solution for evaluating AI performance, ensuring reliable deployment, and enhancing diagnostic workflows."
