AI improves breast cancer detection accuracy among radiologists when reading mammograms and digital breast tomosynthesis (DBT) exams, according to research published July 8 in Radiology.

A team led by doctoral candidate Jessie Gommers from Radboud University Medical Center in Nijmegen, the Netherlands, found that radiologists who had AI support spent more time focusing on suspicious areas flagged by AI and less time on the rest of the breast on mammograms and DBT images.

“These insights suggest that AI can help radiologists prioritize both examinations and specific areas within an image, ultimately improving clinical performance in breast cancer screening,” Gommers told AuntMinnie.

While radiologists continue to explore ways that AI can help improve image interpretation and make workflows more efficient, the researchers noted that AI recommendations could direct radiologists’ eye fixations to areas the AI considers suspicious. This may raise concerns about automation bias, they wrote, adding that eye tracking can help study potential changes in search behavior.

Gommers and colleagues compared radiologist performance and visual search patterns when reading screening mammograms with and without an AI decision-support system (Transpara version 2.1.0, ScreenPoint Medical). They used metrics tied to screening performance and eye tracking.

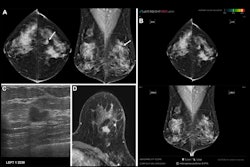

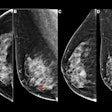

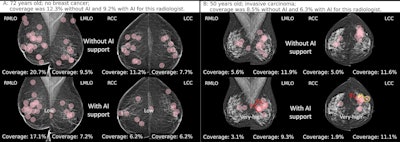

(A) Fixations (pink circles) of a radiologist while reading without and with AI support on a screening mammogram in a 72-year-old woman without breast cancer. The radiologist did not recall the woman in either reading condition. The mean breast fixation coverage for this radiologist was 12.3% without AI support and 9.2% with AI support. The AI tool classified this examination as low risk, with a maximum region score under 40. (B) Fixations of a radiologist while reading without and with AI support on a screening mammogram in a 50-year-old woman with invasive carcinoma of no special type located dorso-laterally in the left breast. The radiologist recalled this woman in both reading conditions, with a mean breast fixation coverage of 8.5% without AI support and 6.3% with AI support. The AI tool classified this examination as very high risk, with a maximum region score of 80 or higher (red numbers; yellow number represents intermediate risk). Diamonds indicate calcifications, and circles denote soft tissue lesions. Image courtesy of the RSNA.

The study included 12 breast screening radiologists from 10 institutions whose experience ranged from four to 32 years. The screening mammograms were acquired between 2016 and 2019. The AI system assigned a region suspicion score ranging from 1 to 100, with 100 indicating the highest likelihood of malignancy.
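To make the scoring concrete, here is a minimal sketch of how an exam-level risk band could be derived from region scores. It uses only the two cutoffs quoted in the figure caption (a maximum region score under 40 for low risk, 80 or higher for very high risk). The function name `exam_risk_band` is hypothetical, and because the caption mentions an intermediate band without giving its cutoffs, the sketch lumps every maximum score from 40 through 79 under one placeholder label. This is an illustration, not the vendor's actual logic.

```python
from typing import Iterable

# Cutoffs taken from the figure caption; everything else is an assumption.
LOW_BELOW = 40        # "low risk, with a maximum region score under 40"
VERY_HIGH_FROM = 80   # "very high risk, with a maximum region score of 80 or higher"


def exam_risk_band(region_scores: Iterable[int]) -> str:
    """Return a risk band for one exam based on its highest region score."""
    scores = list(region_scores)
    if not scores:
        # How the real system treats exams with no marked regions is not
        # described in the article, so this sketch simply refuses them.
        raise ValueError("at least one region score is required")
    if any(not 1 <= s <= 100 for s in scores):
        raise ValueError("region scores must lie between 1 and 100")

    top = max(scores)
    if top < LOW_BELOW:
        return "low"
    if top >= VERY_HIGH_FROM:
        return "very high"
    # Placeholder: the article does not give the cutoffs inside this range.
    return "between low and very high"


print(exam_risk_band([12, 35]))
print(exam_risk_band([55, 83]))
```

Taking the maximum over regions mirrors the caption's wording ("maximum region score"), so a single highly suspicious region is enough to move the whole exam into the top band.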

Final analysis included mammography exams from 150 women with a median age of 55. Of the total, 75 had breast cancer and the remaining 75 did not.

AI assistance significantly improved the average area under the curve (AUC), while sensitivity, specificity, and reading time did not differ significantly between the two conditions. Readers with AI support also spent more time fixating on lesion regions and covered less of the breast overall.

Comparison between AI assistance, no assistance in reading mammograms

| Measure (by average) | No AI assistance | AI assistance | p-value |
| --- | --- | --- | --- |
| AUC | 0.93 | 0.97 | < 0.001 |
| Sensitivity | 81.7% | 87.2% | 0.06 |
| Specificity | 89% | 91.1% | 0.46 |
| Reading time | 29.4 seconds | 30.8 seconds | 0.33 |
| Breast fixation coverage | 11.1% | 9.5% | 0.004 |
| Lesion fixation time | 4.4 seconds | 5.4 seconds | 0.006 |
| Time to first fixation within lesion region | 3.4 seconds | 3.8 seconds | 0.13 |
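For readers who want to compare the rows on a common footing, the following sketch computes the absolute and relative change for each measure in the table above. The values are copied from the table; the variable and function names are illustrative, and the relative change is simply the difference divided by the no-AI value, not a statistic reported by the study.

```python
# (measure, unit of the difference, without AI, with AI), copied from the table.
# "pp" = percentage points, "s" = seconds.
rows = [
    ("AUC", "", 0.93, 0.97),
    ("Sensitivity", " pp", 81.7, 87.2),
    ("Specificity", " pp", 89.0, 91.1),
    ("Reading time", " s", 29.4, 30.8),
    ("Breast fixation coverage", " pp", 11.1, 9.5),
    ("Lesion fixation time", " s", 4.4, 5.4),
    ("Time to first fixation", " s", 3.4, 3.8),
]


def change(without_ai: float, with_ai: float) -> tuple[float, float]:
    """Return (absolute difference, relative difference in percent)."""
    absolute = with_ai - without_ai
    relative_pct = 100 * absolute / without_ai
    return absolute, relative_pct


results = {name: change(a, b) for name, _unit, a, b in rows}

for name, unit, _a, _b in rows:
    absolute, relative_pct = results[name]
    print(f"{name}: {absolute:+.2f}{unit} ({relative_pct:+.1f}% relative)")
```

Read this way, breast fixation coverage falls by roughly 14% in relative terms while lesion fixation time rises by roughly 23%. These are the two eye-tracking measures with significant p-values in the table.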

The results suggest that when AI is accurate, it can improve both detection and efficiency by helping radiologists allocate their time more effectively, Gommers said.

Despite the promising results, Gommers cautioned that it is still important for radiologists to maintain a critical mindset and not become overly reliant on AI.

“Since the presence of AI influences visual search behavior, determining the most effective moment to present AI information will be essential for safe and effective clinical implementation,” she told AuntMinnie.

She and colleagues are currently performing more reader studies to find the best timing for presenting AI support, either immediately upon opening the exams or only when requested.

“These studies also include eye-tracking to better understand how radiologists' visual search patterns are detected when the AI itself is uncertain, such as due to image quality issues or particularly complex cases for AI,” Gommers said. “This would enable more selective use of AI, ensuring it is only used in situations where it is most likely to provide value.”

In an accompanying editorial, Jeremy Wolfe, PhD, from Harvard Medical School in Boston, MA, wrote that more benefits could be obtained by using AI to triage cases.

“If the AI program could indicate that the clinician did not need to look at, for example, a quarter or a third of the cases in a screening setting, that would be a substantial time savings. [The researchers] observe that AI alone outperformed the combined efforts of readers and AI,” Wolfe wrote. “Apparently, the readers could have had more faith in this AI in this situation. Looking at the good standalone performance of AI, one can see that AI could be set to a point where it produced a 50% rate of false-positive findings while producing a 100% rate of true-positive findings. The AI would have labeled as ‘normal’ the other 50% of the normal mammograms.”
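Wolfe's triage arithmetic can be checked with a short sketch. It applies the operating point he describes (100% true-positive rate, 50% false-positive rate) to the study's case mix of 75 cancers and 75 normals. The function `triage` and its field names are hypothetical, and the second call uses an invented screening population of 1,000 exams with 6 cancers. That prevalence is an assumption for illustration only, not a figure from the study or the editorial.

```python
def triage(n_cancer: float, n_normal: float,
           sensitivity: float, false_positive_rate: float) -> dict:
    """Split a case set into exams the AI flags and exams it labels normal."""
    flagged_cancers = n_cancer * sensitivity
    flagged_normals = n_normal * false_positive_rate
    missed_cancers = n_cancer - flagged_cancers
    # Exams set aside are the ones the AI labels normal.
    set_aside = missed_cancers + (n_normal - flagged_normals)
    total = n_cancer + n_normal
    return {
        "cases_to_read": flagged_cancers + flagged_normals,
        "cases_set_aside": set_aside,
        "share_set_aside": set_aside / total,
        "missed_cancers": missed_cancers,
    }


# Study case mix from the article: 75 cancers, 75 normals.
study = triage(75, 75, sensitivity=1.0, false_positive_rate=0.5)

# HYPOTHETICAL screening population (assumed prevalence, not from the source).
screening = triage(6, 994, sensitivity=1.0, false_positive_rate=0.5)

print(f"Study set: {study['share_set_aside']:.1%} of exams set aside")
print(f"Hypothetical screening set: {screening['share_set_aside']:.1%} set aside")
```

On the enriched study set, half of the normals amounts to a quarter of all exams, which matches the low end of the "quarter or a third" Wolfe mentions. Under the assumed low prevalence, the share set aside approaches one half. Whether that operating point would hold on other data is not something the article addresses.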

Wolfe added that while AI will not replace radiologists in at least the near future, its use will grow, and “it will be increasingly useful if we are able to answer the right set of behavioral science questions that it raises.”
