Radiologists who missed an abnormality on an image were more likely to be perceived as legally culpable if AI detected the abnormality, according to a study published May 22 in NEJM AI.

However, presenting error rates for the AI mitigated that tendency to judge radiologists more severely in the presence of dissenting AI results, wrote a team led by Grayson Baird, PhD, from Brown University in Providence, RI.

With the increased use of AI-based software assisting with diagnostics comes the concern that its use will become a factor in determining liability in court cases. Radiology researchers continue to study how the public -- and potential jurors in particular -- will perceive the role of these AI applications and their accuracy compared to that of human readers.

The Baird team sought to determine the conditions under which a jury would be more likely to side with a plaintiff in a court case in which an AI application was used.

The study included two cohorts of 652 and 682 jurors, respectively. Participants viewed a vignette describing a radiologist being sued for missing either a brain bleed or cancer.

In each cohort, participants were randomly assigned to one of five conditions, four of which involved the use of an AI program: the AI agreed with the radiologist, also failing to find the abnormality (AI agree); the AI found the abnormality but the radiologist did not (AI disagree); neither the AI nor the radiologist found the abnormality, and the AI was disclosed as having a false omission rate (FOR) of 1% (AI agree + FOR); and the AI found the abnormality but the radiologist did not, and the AI was disclosed as having a false discovery rate (FDR) of 50% (AI disagree + FDR). The control subgroup had no AI involved in the diagnosis. All participants in the subgroups viewed the same vignette.
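The article does not spell out how FOR and FDR are calculated. As a minimal sketch using the standard confusion-matrix definitions, the two disclosed rates could arise as follows. The function names and the counts are hypothetical, chosen only to reproduce the 1% and 50% figures in the vignettes; they are not taken from the study.

```python
def false_omission_rate(false_neg: int, true_neg: int) -> float:
    """Share of the AI's 'normal' calls in which an abnormality was actually present."""
    return false_neg / (false_neg + true_neg)


def false_discovery_rate(false_pos: int, true_pos: int) -> float:
    """Share of the AI's 'abnormal' flags in which no abnormality was actually present."""
    return false_pos / (false_pos + true_pos)


# Hypothetical counts (assumption): 100 'normal' calls and 100 'abnormal' flags.
for_rate = false_omission_rate(false_neg=1, true_neg=99)
fdr_rate = false_discovery_rate(false_pos=50, true_pos=50)

print(f"FOR = {for_rate:.0%}, FDR = {fdr_rate:.0%}")
```

Read this way, a 1% FOR means the AI rarely misses an abnormality when it calls a scan normal, while a 50% FDR means half of its flags are false alarms.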

The participants then answered whether the radiologist in the vignette they viewed met the standard for duty of care. A “no” answer indicated that the participant sided with the plaintiff (the patient or patient’s family), while a “yes” meant that the participant sided with the defendant (the radiologist).

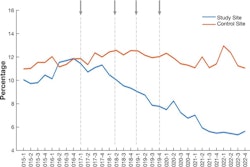

For the brain bleed vignette, members of the control group sided with the plaintiff 56.3% of the time. When AI agreed with the radiologist, participants sided with the plaintiff 50% of the time (50% vs. 56.3%, p = 0.33), suggesting that if AI also missed the pathology, it neither helped nor harmed the radiologist’s defense.

However, when AI flagged the brain bleed and the radiologist did not, the participants sided with the plaintiff 72.9% of the time. This finding showed that AI disagreeing with the radiologist hurt the defense significantly more than when no AI was used (72.9% vs. 56.3%; p = 0.01).

The inclusion of false omission and false discovery rates (FOR and FDR) showed mitigating effects on the perception of culpability. The participants in the AI agree + FOR group, where neither AI nor the radiologist identified the bleed and the 1% FOR of the AI tool was disclosed, sided with the plaintiff only 34% of the time as opposed to 50% of the time for the AI agree group, showing that including the low false omission rate helped the radiologist’s defense.

Furthermore, only 48.8% of participants in the AI disagree + FDR group sided with the plaintiff, suggesting that when the AI’s FDR of 50% was disclosed, it also helped the defense (48.8% disagree + FDR vs. 72.9% disagree). However, the difference between the disagree + FDR subgroup and the control subgroup wasn’t statistically significant.

For the cancer vignette, 65.2% of the control group sided with the plaintiff. Again, the agreement of AI in missing the pathology neither helped nor harmed the defendant, with 63.5% of the AI agree group siding with the plaintiff. For the AI disagree group, 78.7% of the participants sided with the plaintiff.

As with the brain bleed scenario, the inclusion of the FOR and FDR helped the defense, albeit not as significantly for lung cancer. The team reported that 56.4% of participants sided with the plaintiff in the AI agree + FOR group versus 63.5% in the AI agree group. And 73.1% of participants sided with the plaintiff in the AI disagree + FDR subgroup compared to 78.7% in the AI disagree subgroup.

The authors suggested that the differences in this finding between the two vignettes might lie in the participants’ subjective perception of factors such as the relative severity of the outcomes and the pathologies involved.
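The gap between the two vignettes can be made concrete by tabulating the percentages reported above and taking the percentage-point drop in plaintiff-siding when an error rate was disclosed. The sketch below uses only the figures quoted in this article; the dictionary layout and the `disclosure_effect` helper are illustrative assumptions, not part of the study's analysis, and the output says nothing about statistical significance.

```python
# Percentage of participants siding with the plaintiff, as reported in the article.
plaintiff_pct = {
    "brain bleed": {"control": 56.3, "agree": 50.0, "disagree": 72.9,
                    "agree+FOR": 34.0, "disagree+FDR": 48.8},
    "cancer": {"control": 65.2, "agree": 63.5, "disagree": 78.7,
               "agree+FOR": 56.4, "disagree+FDR": 73.1},
}


def disclosure_effect(rates: dict) -> dict:
    """Percentage-point drop in plaintiff-siding when the AI error rate is disclosed."""
    return {
        "FOR": round(rates["agree"] - rates["agree+FOR"], 1),
        "FDR": round(rates["disagree"] - rates["disagree+FDR"], 1),
    }


effects = {name: disclosure_effect(rates) for name, rates in plaintiff_pct.items()}
for name, effect in effects.items():
    print(f"{name}: FOR {effect['FOR']} points, FDR {effect['FDR']} points")
```

On these figures, disclosure lowered plaintiff-siding by 16.0 and 24.1 points for the brain bleed vignette, versus 7.1 and 5.6 points for the cancer vignette.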

“The fact that we observed similar findings for AI disagree + FDR versus AI disagree and AI agree + FOR versus AI agree suggests that priming people with the notion of [stochastic] imperfection in one entity [AI] may make jurors less likely to find that radiologists breached the standard of care for perceptual errors,” they wrote.
