AI and radiologists may differ in the imaging findings and patient characteristics associated with their false-positive digital breast tomosynthesis (DBT) exams, according to research published October 1 in the American Journal of Roentgenology.

A team led by Tara Shahrvini, MD, and Erika Wood, MD, from the University of California, Los Angeles, reported that AI-only flagged findings on DBT were most commonly benign calcifications, while radiologist-only flagged findings were most commonly masses.

“The findings may help guide strategies for using AI to improve DBT recall specificity,” the research team wrote. “In particular, concordant findings may represent an enriched subset of actionable abnormalities.”

AI tools continue to show their potential for streamlining workflows and boosting screening accuracy. But many radiologists may feel skeptical about their clinical utility. The researchers also noted a lack of data directly comparing individual false-positive imaging findings flagged by AI with findings flagged by radiologists.

“Data addressing these gaps will be critical to inform how AI may be used to reduce false-positive recalls and thereby improve screening outcomes,” the authors wrote.

Shahrvini, Wood, and colleagues compared how AI and radiologists characterize false-positive DBT exams in a breast cancer screening population. The study included data from 2,977 women with an average age of 58 who underwent 3,183 screening DBT exams between 2013 and 2017. The researchers used a commercial AI tool (Transpara v1.7.1, ScreenPoint Medical) to analyze the DBT exams.

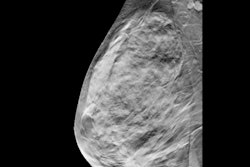

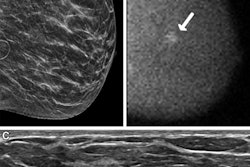

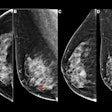

Examples of AI-only flagged findings corresponding with benign calcifications. (A to C) Synthetic views from false-positive screening DBT examinations in three different patients, including dystrophic round calcifications in a 63-year-old patient (A), skin calcifications in a 49-year-old patient (B), and vascular calcifications in a 71-year-old patient (C). No patient was diagnosed with breast cancer within one year after the screening examination. Credit: ARRS

The team defined positive exams as an elevated-risk result for AI and as BI-RADS category 0 for interpreting radiologists. It defined false-positive exams as positive exams that were not followed by a breast cancer diagnosis within one year.

AI and radiologists had false-positive rates of 9.7% and 9.5%, respectively. Of the 541 total false-positive exams, 233 (43%) were false positives for AI only, 237 (44%) for radiologists only, and 71 (13%) for both.
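
The overlap percentages above follow directly from the reported counts. As a minimal sketch (the variable and function names are illustrative assumptions, not taken from the study), the arithmetic can be reproduced like this:

```python
# Counts reported in the article for false-positive exams.
ai_only = 233            # false positive for AI only
radiologist_only = 237   # false positive for radiologists only
both = 71                # false positive for both AI and radiologists

# Total number of false-positive exams across all three groups.
total = ai_only + radiologist_only + both


def share(count, whole):
    """Return count as a percentage of whole, rounded to a whole number."""
    return round(100 * count / whole)


# Percentage of all false-positive exams that falls in each group.
shares = {
    "AI only": share(ai_only, total),
    "Radiologists only": share(radiologist_only, total),
    "Both": share(both, total),
}

print(f"Total false-positive exams: {total}")
for label, pct in shares.items():
    print(f"{label}: {pct}%")
```

The three groups are mutually exclusive, so their counts sum to the 541 false-positive exams and the rounded shares match the 43%, 44%, and 13% reported.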

Patient-level differences between AI-only and radiologist-only false-positive DBT exams

| Patient-level characteristic | Radiologist-only | AI-only | p-value |
|---|---|---|---|
| Average patient age | 52 years | 60 years | < 0.001 |
| Frequency of dense breasts | 57% | 24% | < 0.001 |
| Frequency of a personal history of breast cancer | 4% | 13% | < 0.001 |
| Frequency of prior breast imaging studies | 78% | 95% | < 0.001 |
| Frequency of prior breast surgical procedures | 11% | 37% | < 0.001 |

The false-positive exams included 932 AI-only flagged findings, 315 radiologist-only flagged findings, and 49 flagged findings consistent between AI and radiologists.

The most common imaging findings flagged by AI were benign calcifications (40%), asymmetries (13%), and benign postsurgical change (12%). The findings flagged most often by radiologists were masses (47%), asymmetries (19%), and indeterminate calcifications (15%).

Finally, of 18 concordant flagged findings that needed biopsy, 44% proved to be high-risk lesions.

The authors highlighted that the differences observed in these results “may inform future research on the optimization of AI to support screening specificity.”

“The relatively greater number of AI-flagged findings per examination raises concerns about automation bias from AI use that could increase rather than reduce workload,” they wrote. “Although only a small fraction of false-positive examinations overlapped between AI and radiologists, concordant flagged findings may represent an enriched subset of actionable abnormalities.”
