A head-to-head comparison of three commercially available AI tools for detecting fractures on x-rays has revealed the good and the bad in each model, researchers have reported.

While all three models showed moderate to high performance for straightforward fracture detection, they had limited accuracy for complex scenarios such as multiple fractures and dislocations, noted lead author Ina Luiken, MD, of the Technical University of Munich (TUM) in Germany, and colleagues.

“This suggests that current algorithms are not yet robust enough for stand-alone diagnosis and should primarily assist, rather than replace, radiologists and reporting radiographers,” the group wrote. The study was published October 7 in Radiography.

Despite the demonstrated potential of AI models in controlled research environments, significant questions remain regarding the translation of AI into routine clinical practice, the researchers noted.

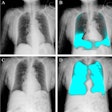

To bridge the knowledge gap, the group prospectively evaluated three commercially available models -- BoneView (Gleamer, Paris, France), Rayvolve (AZmed, Paris, France), and RBfracture (Radiobotics, Copenhagen, Denmark) -- on x-rays from 1,037 adult patients covering 22 anatomical regions. Fractures were present in 29.6% of patients; 13.7% had acute fractures and 6.7% had multiple fractures.

The AI models' fracture outputs ("none," "suspicion," or "yes") were manually compared against verified radiology reports or a diagnostically clarifying CT report, and the researchers calculated the area under the receiver operating characteristic curve (AUC), sensitivity, and specificity for each model.
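The article does not spell out how the three-level outputs were converted into AUC, sensitivity, and specificity. One common approach, sketched below in Python as an assumption rather than the authors' method, treats the outputs as an ordinal score ("none" < "suspicion" < "yes"), computes AUC as the rank-based (Mann-Whitney) statistic, and applies a cut-off to report sensitivity and specificity. Whether "suspicion" counted as positive in the study is not stated here; the `threshold` parameter, the score mapping, and the toy labels are all hypothetical.

```python
# Hypothetical ordinal mapping of the three-level AI output (an assumption,
# not the study's documented scoring).
SCORE = {"none": 0, "suspicion": 1, "yes": 2}


def roc_auc(labels, outputs):
    """Rank-based (Mann-Whitney) AUC: the probability that a randomly chosen
    fracture case scores higher than a randomly chosen non-fracture case,
    with ties counted as half a win."""
    pos = [SCORE[o] for y, o in zip(labels, outputs) if y]
    neg = [SCORE[o] for y, o in zip(labels, outputs) if not y]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def sens_spec(labels, outputs, threshold=1):
    """Sensitivity and specificity when a score >= threshold counts as positive.
    threshold=1 treats "suspicion" as positive (an assumption)."""
    tp = fn = tn = fp = 0
    for y, o in zip(labels, outputs):
        predicted = SCORE[o] >= threshold
        if y and predicted:
            tp += 1
        elif y:
            fn += 1
        elif predicted:
            fp += 1
        else:
            tn += 1
    return tp / (tp + fn), tn / (tn + fp)


# Toy example (hypothetical data): True = fracture confirmed by the reference report.
labels = [True, True, True, False, False, False, False]
outputs = ["yes", "suspicion", "none", "none", "none", "suspicion", "none"]
print(roc_auc(labels, outputs))
print(sens_spec(labels, outputs, threshold=1))
print(sens_spec(labels, outputs, threshold=2))
```

On this toy data the AUC is 0.75; raising the cut-off from "suspicion" to "yes" lowers sensitivity from 2/3 to 1/3 while raising specificity from 0.75 to 1.0, the same kind of trade-off that separates the three products.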

Overall, Rayvolve achieved the highest sensitivity, BoneView offered balanced performance, and RBfracture prioritized specificity, the researchers reported.

Performance of three AI models in detecting all fractures

| Measure | Rayvolve | BoneView | RBfracture |
|---|---|---|---|
| AUC | 84.9% | 84% | 77.2% |
| Sensitivity | 79.5% | 75.6% | 60.9% |
| Specificity | 90.3% | 92.3% | 93.6% |
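Read per 100 cases, the rates in the table make the sensitivity/specificity trade-off concrete. The short Python sketch below is plain arithmetic on the reported "all fractures" figures; it assumes those rates apply uniformly across cases, and the function name `per_100` is illustrative. It does not estimate predictive values, which would also depend on how common fractures are in a given setting.

```python
# Sensitivity and specificity reported for "all fractures" in the table.
REPORTED = {
    "Rayvolve": (0.795, 0.903),
    "BoneView": (0.756, 0.923),
    "RBfracture": (0.609, 0.936),
}


def per_100(sensitivity, specificity):
    """Expected missed fractures per 100 fracture cases and false alarms
    per 100 fracture-free cases implied by the two rates."""
    missed = round(100 * (1 - sensitivity), 1)
    false_alarms = round(100 * (1 - specificity), 1)
    return missed, false_alarms


for name, (sens, spec) in REPORTED.items():
    missed, false_alarms = per_100(sens, spec)
    print(f"{name}: ~{missed} missed per 100 fractures, "
          f"~{false_alarms} false alarms per 100 fracture-free cases")
```

By this arithmetic, RBfracture would miss roughly twice as many fractures as Rayvolve (about 39 vs. 20 per 100) while producing fewer false alarms (about 6 vs. 10 per 100 fracture-free cases).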

For acute fractures, AUCs were comparable (range: 84.8% to 87.8%). For multiple fractures, however, performance was limited, with AUCs ranging from 64.2% to 73.4%. In addition, Rayvolve had a higher AUC than BoneView for dislocation (61.9% vs. 54.5%), while BoneView and RBfracture outperformed Rayvolve for effusion (AUCs of 69.6% and 73.6%, respectively, vs. 58%). Finally, no algorithm exceeded 91% accuracy for acute fractures, the researchers reported.

“Commercial AI algorithms showed moderate to high performance for straightforward fracture detection but limited accuracy for complex scenarios such as multiple fractures and dislocations,” the authors noted.

Ultimately, the models' differing performance profiles point to different roles in clinical practice, according to Luiken and colleagues.

Specifically, Rayvolve's high sensitivity, both for all fractures and for fractures in the presence of osteosynthesis material, makes it a promising tool for initial screening; BoneView's balanced performance supports its use as a second reader to confirm negative findings; and RBfracture's high specificity is valuable for ruling out fractures, they suggested.

“Current tools should be used as adjuncts rather than replacements for radiologists and reporting radiographers,” the team concluded. “Multicenter validation and more diverse training data are necessary to improve generalizability and robustness.”
