A group in South Korea has validated an AI model for generating chest x-ray reports in an experiment involving three clinical contexts, with more than 85% of the reports deemed acceptable, according to a study published September 23 in Radiology.

The model’s reports had similar acceptability to radiologist-written reports as determined by a panel of thoracic radiologists, wrote lead author Eui Jin Hwang, MD, PhD, of Seoul National University Hospital, and colleagues.

“AI-generated reports are often evaluated against reference standard reports. In this study, instead of preparing reference standard reports, seven thoracic radiologists independently assessed the AI reports for clinical acceptability,” the group noted.

Using AI models designed to generate free-text reports from x-rays is a promising application, yet these models require rigorous evaluation, the researchers explained. To that end, they tested a model (KARA-CXR, version 1.0.0.3, Soombit.ai) that was trained on 8.8 million chest x-ray reports from patients older than 15 years across 42 institutions in South Korea and the U.S. The model integrates an anomaly detection classifier with a caption generator for formulating reports.

Seven thoracic radiologists evaluated the reports' acceptability based on a standard criterion (acceptable without revision or with minor revision) and a stringent criterion (acceptable without revision). In addition, the researchers surveyed the radiologists on the potential for the model to substitute for human clinicians.

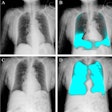

Example of an acceptable AI-generated chest x-ray report. (A) Anteroposterior chest x-ray in a 68-year-old female patient who visited the emergency department because of acute-onset dyspnea shows an enlarged heart, bilateral pleural effusion, and bilateral interstitial thickening, suggesting heart failure and interstitial pulmonary edema. (B) The AI-generated report appropriately describes the findings of the x-ray and suggests a possible diagnosis. All seven thoracic radiologists evaluated the AI-generated report as acceptable without revision. RSNA

In addition, compared with radiologist-written reports, AI-generated reports identified x-rays with referable abnormalities with greater sensitivity (81.2% versus 59.4%; p < 0.001), but lower specificity (81% versus 93.6%; p < 0.001). Lastly, in the survey, most radiologists indicated that AI-generated reports were not yet reliable enough to replace radiologist-written reports.
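
For readers less familiar with the two metrics, the short sketch below shows how sensitivity and specificity are conventionally computed from counts of correct and incorrect calls. The function names and the counts are hypothetical and chosen only for illustration; they are not taken from the study, which did not publish its calculation in this form here.

```python
def sensitivity(true_positives: int, false_negatives: int) -> float:
    """Share of truly abnormal x-rays that were flagged as abnormal."""
    return true_positives / (true_positives + false_negatives)


def specificity(true_negatives: int, false_positives: int) -> float:
    """Share of truly normal x-rays that were correctly left unflagged."""
    return true_negatives / (true_negatives + false_positives)


# Hypothetical counts for illustration only; these are NOT from the study.
example_sensitivity = sensitivity(true_positives=8, false_negatives=2)
example_specificity = specificity(true_negatives=9, false_positives=1)

print(f"sensitivity={example_sensitivity:.1%}, specificity={example_specificity:.1%}")
```

A reader that flags more x-rays as abnormal will tend to miss fewer true abnormalities (higher sensitivity) while raising more false alarms (lower specificity), which is the direction of the trade-off reported for the AI-generated reports.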

“These results indicate that although the AI algorithm meets basic reporting standards, it may fall short of higher quality standards,” the researchers wrote.

In an accompanying editorial, Chen Jiang Wu, MD, PhD, of the First Affiliated Hospital of Nanjing Medical University in China and Joon Beom Seo, MD, of the University of Ulsan College of Medicine in Seoul wrote that AI models that perform at this level are positioned diagnostically between residents and board-certified radiologists, and thus offer practical benefits.

“The results of the study by Hwang et al highlight the capacity of an AI model to expedite reporting and uphold foundational quality in constrained or urgent settings,” they wrote.

With robust safeguards – including safety protocols, regulatory adherence, standardized appraisals, pragmatic deployment strategies, and advancements in multimodal large language models – AI stands to optimize efficiency, alleviate workloads in strained systems, and advance global health care equity, Wu and Seo suggested.

“The research and application of generative AI in radiology is in a period of rapid growth,” they concluded. “Let us look forward to the future.”
