In a recent interview, visual attention research expert Jeremy Wolfe, PhD, of Harvard Medical School in Cambridge, MA, discussed an emerging trend: integrating radiologists' eye gaze data into AI algorithms.

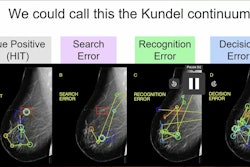

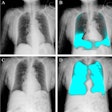

The approach has met with early success in mammography and X-ray models. With more development, it could help reduce perceptual errors (“misses”) and help solve the problem of labeling images, early research suggests. More importantly, Wolfe noted, it could make AI a more collaborative partner.

“When you're feeding the human data into the model, what you're really trying to do, I think, is to make that model in a sense more human, to make it a better partner for another human doing the task,” Wolfe said.

Wolfe, who recently penned an editorial on the subject of AI and behavioral research, is a professor of radiology and ophthalmology and heads the Visual Attention Lab at Harvard. He explained that efforts to integrate radiologists' eye movements into AI aren’t about training models just to identify the troubling spots on images, but about teaching them what’s behind the eye movements themselves.

And while training AI to scan an image the way radiologists do could raise fears that AI will eventually replace them, we’re not likely to reach that point, Wolfe said.

“There's way too many images out there. If the AI companion can help you with that, great. I don't think the radiologists need to worry about switching careers just yet,” he said.

AI trained on eye movements could, in fact, help with the problem of labeling images.